SpringBoot-Kafka Tutorial

Messages and Events Introduction

What is an event?

In Apache Kafka, an event is an indication that something has happened. For example, when a user logs in to a website, an event is generated to indicate that a login took place. Similarly, when a new product is created, a microservice can publish a ProductCreatedEvent, and when a new order is placed, an event is generated to indicate that the order has been placed. Events therefore represent changes in the state of an application or system. In other words, an event is a way of saying that something has changed in your application or in your system.

- UserLoggedInEvent

- ProductCreatedEvent

- OrderCreatedEvent
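The three events above can be sketched as immutable Java records, since an event describes something that has already happened and should not change afterwards. This is a minimal plain-Java illustration, assuming Java 17 or later; the `DomainEvent` interface and all field names are hypothetical and are not part of Kafka or Spring.

```java
import java.time.Instant;
import java.util.List;

public class EventExamples {

    // Hypothetical marker interface: every event records when it happened.
    public interface DomainEvent {
        Instant occurredAt();
    }

    // Each record is an immutable fact about something that already happened.
    // Field names are illustrative assumptions, not taken from the tutorial.
    public record UserLoggedInEvent(String userId, Instant occurredAt) implements DomainEvent {}

    public record ProductCreatedEvent(String productId, String title, Instant occurredAt) implements DomainEvent {}

    public record OrderCreatedEvent(String orderId, String productId, int quantity, Instant occurredAt)
            implements DomainEvent {}

    public static void main(String[] args) {
        Instant now = Instant.now();
        List<DomainEvent> events = List.of(
                new UserLoggedInEvent("user-42", now),
                new ProductCreatedEvent("product-1", "Keyboard", now),
                new OrderCreatedEvent("order-7", "product-1", 2, now));

        // Print the event type and when it happened.
        for (DomainEvent event : events) {
            System.out.println(event.getClass().getSimpleName() + " at " + event.occurredAt());
        }
    }
}
```

Using records keeps event objects read-only, which fits the idea that an event is a statement about the past rather than a command.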

Naming Convention:

Event names should use the simple past tense. A name starts with a noun, is followed by the action that was performed, and ends with the suffix "Event".

- For example, when a new product is created, the products microservice publishes a ProductCreatedEvent.

- When the product has been shipped, we publish an event called ProductShippedEvent.

- And when we delete the product completely, we publish an event called ProductDeletedEvent.
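The naming convention can also be expressed in code. The helpers below are hypothetical (`eventName` and `looksLikeEventName` are illustrative names, not a Spring or Kafka API): one builds a name from a noun and a past-tense action, and the other performs a rough structural check. The check only confirms the UpperCamelCase shape, the presence of at least a noun and an action, and the `Event` suffix; it cannot tell whether the action is really in the past tense.

```java
public class EventNames {

    // Builds a name following the convention: noun + past-tense action + "Event".
    public static String eventName(String noun, String pastTenseAction) {
        return capitalize(noun) + capitalize(pastTenseAction) + "Event";
    }

    // Rough check: at least two UpperCamelCase words followed by the "Event" suffix.
    // It does not verify that the action word is in the past tense.
    public static boolean looksLikeEventName(String name) {
        return name.matches("(?:[A-Z][a-z0-9]*){2,}Event");
    }

    private static String capitalize(String word) {
        return Character.toUpperCase(word.charAt(0)) + word.substring(1);
    }

    public static void main(String[] args) {
        System.out.println(eventName("product", "created")); // ProductCreatedEvent
        System.out.println(eventName("product", "shipped")); // ProductShippedEvent
        System.out.println(eventName("product", "deleted")); // ProductDeletedEvent
        System.out.println(looksLikeEventName("ProductShippedEvent")); // true
        System.out.println(looksLikeEventName("ShipProduct"));         // false
    }
}
```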

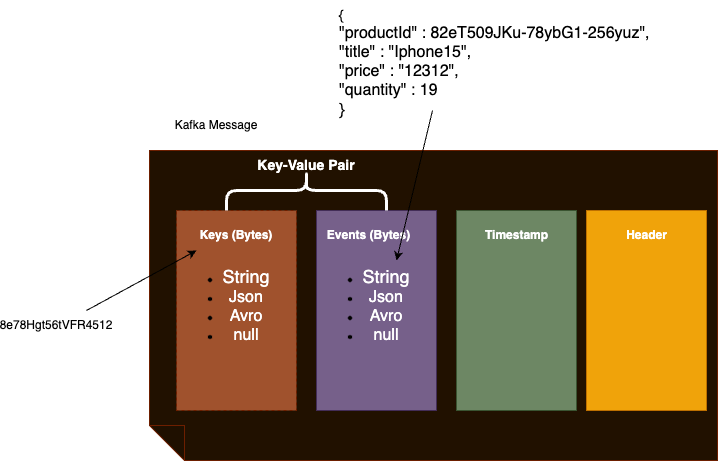

Kafka Message:

You can think of a Kafka message as an envelope that carries something inside: the event data is the content of this envelope. As shown in the image above, the event data can take different formats. It can be a simple string value, a JSON payload, Avro-encoded data, or simply null. Basically, anything that can be serialized into an array of bytes can be used as a payload. For example, if you choose a JSON representation, the event payload can look like the one shown in the image above.

It is a JSON representation of a product that was created by the products microservice and then published as event data to the other microservices that are subscribed to receive this information.
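To illustrate the envelope idea, here is a minimal plain-Java sketch that builds a JSON payload for a created product, turns it into bytes on the producer side, and turns the bytes back into text on the consumer side. The field names (`productId`, `title`, `price`, `quantity`) are assumptions, because the exact JSON from the image is not reproduced here. The JSON is built by hand without escaping, so it is only safe for simple values; in a Spring Boot application you would normally let a serializer such as Kafka's `StringSerializer` or Spring Kafka's `JsonSerializer` do this work.

```java
import java.nio.charset.StandardCharsets;
import java.util.Locale;

public class JsonPayloadExample {

    // Builds a JSON payload for a created product. Field names are assumptions.
    // Values are not escaped, so this is only safe for simple strings.
    public static String productCreatedJson(String productId, String title, double price, int quantity) {
        return String.format(Locale.ROOT,
                "{\"productId\":\"%s\",\"title\":\"%s\",\"price\":%.2f,\"quantity\":%d}",
                productId, title, price, quantity);
    }

    // Producer side: encode the payload as UTF-8 bytes; a null payload stays null.
    public static byte[] serialize(String payload) {
        return payload == null ? null : payload.getBytes(StandardCharsets.UTF_8);
    }

    // Consumer side: decode the stored bytes back into text; null stays null.
    public static String deserialize(byte[] bytes) {
        return bytes == null ? null : new String(bytes, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        String json = productCreatedJson("p-1", "Keyboard", 49.5, 3);
        byte[] onTheWire = serialize(json);
        String received = deserialize(onTheWire);
        System.out.println(received); // {"productId":"p-1","title":"Keyboard","price":49.50,"quantity":3}
        System.out.println(onTheWire.length + " bytes");
    }
}
```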

The default maximum message size is about one megabyte, which is plenty in most cases. But when deciding what information to include in an event object, keep in mind that the Kafka message will be sent over the network, so the larger the message, the slower your system will perform. Ideally, the event object should contain all the information about the event that the consuming microservice will need. But if the event becomes very large, consider optimizing it. For example, if you want to include an image or a video file, it is better to include a link to that file than to serialize the entire file.

Whatever information you decide to include in the message payload, the producer microservice serializes it into an array of bytes. The message is then sent over the network and stored in a topic as a byte array. When a consumer microservice receives this message, it deserializes the payload into the destination data format.
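The size guideline can be checked before sending. The sketch below assumes a limit of 1,048,576 bytes (1 MiB), the default of the Kafka producer's `max.request.size` setting; brokers and topics have their own limits, so treat the constant as an assumption to adjust for your cluster. It compares a payload that embeds a simulated 2 MiB image with one that only carries a link to the image; the URL is a hypothetical placeholder.

```java
import java.nio.charset.StandardCharsets;

public class PayloadSizeCheck {

    // Assumed limit: 1 MiB, the producer's default max.request.size.
    public static final int ASSUMED_MAX_BYTES = 1_048_576;

    // Compares only the payload bytes; a real record also carries the key,
    // headers and protocol overhead, so leave some headroom in practice.
    public static boolean fitsWithinLimit(byte[] payload) {
        return payload == null || payload.length <= ASSUMED_MAX_BYTES;
    }

    public static void main(String[] args) {
        // Embedding a (simulated) 2 MiB image directly in the payload.
        byte[] embeddedImage = new byte[2 * 1_048_576];

        // Referencing the image by link instead; the URL is hypothetical.
        byte[] withLink = "{\"productId\":\"p-1\",\"imageUrl\":\"https://example.com/images/p-1.png\"}"
                .getBytes(StandardCharsets.UTF_8);

        System.out.println("embedded image fits: " + fitsWithinLimit(embeddedImage)); // false
        System.out.println("link fits: " + fitsWithinLimit(withLink) + " (" + withLink.length + " bytes)");
    }
}
```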

The second main part of a Kafka message is the message key, which can also be of different data types, including null. Whatever data type you choose for the key, the producer microservice serializes its value into an array of bytes before it is stored in the Kafka topic.

So you can think of a Kafka message as a key-value pair, where both the key and the value can be of different data types, including null. A key could be, for example, an alphanumeric string of characters, or it can have a custom format.
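A simplified model of a stored message makes the key-value idea concrete: after serialization, both parts are just byte arrays, and either may be null. `StoredMessage` and `toStored` are hypothetical names for this sketch; they mimic what a UTF-8 string serializer does but are not the Kafka client API.

```java
import java.nio.charset.StandardCharsets;

public class KeyValueMessage {

    // Hypothetical, simplified view of what ends up in a topic:
    // both key and value are byte arrays, and either one may be null.
    public record StoredMessage(byte[] key, byte[] value) {}

    // Serializes a String key and a String value with UTF-8.
    public static StoredMessage toStored(String key, String value) {
        return new StoredMessage(utf8(key), utf8(value));
    }

    // Null stays null, mirroring a null key or a null payload.
    private static byte[] utf8(String text) {
        return text == null ? null : text.getBytes(StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        StoredMessage withKey = toStored("a1b2c3d4", "{\"productId\":\"a1b2c3d4\"}");
        StoredMessage withoutKey = toStored(null, "plain text payload");
        System.out.println(withKey.key().length + " key bytes"); // 8 key bytes
        System.out.println(withoutKey.key() == null);            // true
    }
}
```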

The third important part of a Kafka message is the timestamp. This value can be set by the system, or you can set it manually. Either way, it is important to have a timestamp, because some use cases require knowing when in history an event took place. These are the three main and most frequently used parts of a Kafka message, but a Kafka message can have other parts as well.
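The choice between a system-assigned and a manually set timestamp can be sketched as a simple fallback. In the Kafka Java client, `ProducerRecord` accepts a nullable `Long` timestamp, and when it is null the producer uses the current time by default. The plain-Java model below mirrors that idea; `TimestampedMessage` and `create` are hypothetical names, and the method takes a `Clock` so the automatic case can be tested deterministically.

```java
import java.time.Clock;
import java.time.Instant;

public class MessageTimestamp {

    // Hypothetical message model with an epoch-millisecond timestamp.
    public record TimestampedMessage(String key, String value, long timestampMs) {}

    // No timestamp supplied: take it from the clock ("set by the system").
    // Timestamp supplied: keep the caller's value ("set manually").
    public static TimestampedMessage create(String key, String value, Long timestampMs, Clock clock) {
        long ts = (timestampMs != null) ? timestampMs : clock.millis();
        return new TimestampedMessage(key, value, ts);
    }

    public static void main(String[] args) {
        Clock clock = Clock.systemUTC();
        TimestampedMessage automatic = create("order-7", "OrderCreatedEvent", null, clock);
        TimestampedMessage manual = create("order-7", "OrderCreatedEvent",
                Instant.parse("2024-01-15T10:30:00Z").toEpochMilli(), clock);

        System.out.println(Instant.ofEpochMilli(automatic.timestampMs()));
        System.out.println(Instant.ofEpochMilli(manual.timestampMs())); // 2024-01-15T10:30:00Z
    }
}
```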

Headers are a list of key-value pairs that you can use to add metadata to your Kafka message. For example, you can include an authorization header that the destination microservice might need to access user-protected resources. Headers are optional, however, and you might never need them.
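Headers can be modelled as an ordered list of pairs whose key is a String and whose value is a byte array. This matches the shape of headers in the Kafka Java client, where the same key may appear more than once and `Headers.lastHeader(key)` returns the most recently added one. The `MessageHeaders` class below is a hypothetical plain-Java sketch, not that API, and the token is a placeholder rather than a real credential.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import java.util.Optional;

public class MessageHeaders {

    // One header: a String key and a byte-array value (hypothetical model).
    public record Header(String key, byte[] value) {}

    private final List<Header> headers = new ArrayList<>();

    // Appends a header; duplicate keys are allowed and kept in insertion order.
    public MessageHeaders add(String key, String value) {
        headers.add(new Header(key, value.getBytes(StandardCharsets.UTF_8)));
        return this;
    }

    // Returns the value of the most recently added header with this key, if any.
    public Optional<String> lastValue(String key) {
        for (int i = headers.size() - 1; i >= 0; i--) {
            Header h = headers.get(i);
            if (h.key().equals(key)) {
                return Optional.of(new String(h.value(), StandardCharsets.UTF_8));
            }
        }
        return Optional.empty();
    }

    public int size() {
        return headers.size();
    }

    public static void main(String[] args) {
        // "<token>" is a placeholder, not a real credential.
        MessageHeaders headers = new MessageHeaders().add("Authorization", "Bearer <token>");
        System.out.println(headers.lastValue("Authorization").orElse("none")); // Bearer <token>
        System.out.println(headers.lastValue("missing").orElse("none"));       // none
    }
}
```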