SpringBoot-Kafka Tutorial

Kafka Topic Introduction

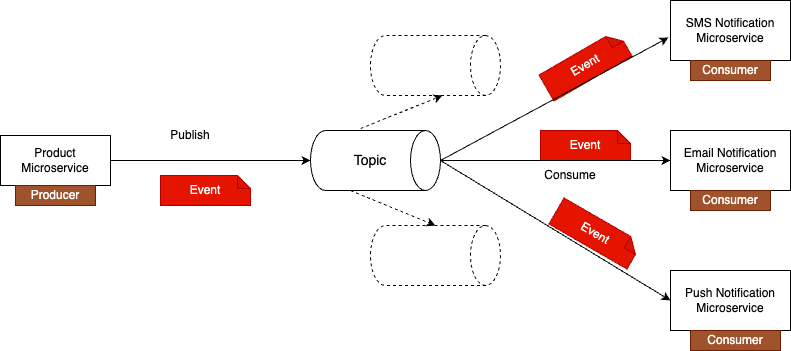

A topic is the place where Kafka stores all published messages. For example, in the diagram we have a Product microservice on one side and three microservices on the other: an SMS Notification microservice, an Email Notification microservice, and a Push Notification microservice. Each of these three microservices wants to be notified when a new product is created in our system.

So when a new product is created, the Product microservice publishes an event to notify these consumer microservices. But instead of sending this event directly to a destination microservice, the Kafka producer stores it in a Kafka topic.

Topics are partitioned, and each partition can be replicated across multiple Kafka servers for durability. This way, even if there is an issue with one Kafka server, your data is still stored on other Kafka servers. So all events published by the Product microservice are first stored in a Kafka topic, and then the consumer microservices that are interested in these events read them from that topic.
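The flow described above can be sketched with a small in-memory model. This is only an illustration and not the Kafka client API: the class `TopicFanOutSketch`, its methods `publish` and `poll`, and the consumer names are all made up for this example. It shows the producer storing an event once, and each consumer microservice reading it independently by keeping its own read position.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Toy in-memory model of a topic (hypothetical, NOT the Kafka client API).
class TopicFanOutSketch {
    // Events stay in the list after they are read.
    private final List<String> events = new ArrayList<>();
    // Each consumer microservice tracks its own read position.
    private final Map<String, Integer> positions = new HashMap<>();

    // The producer stores the event in the topic instead of calling consumers directly.
    void publish(String event) {
        events.add(event);
    }

    // Returns the events this consumer has not read yet and advances only its own position.
    List<String> poll(String consumer) {
        int from = positions.getOrDefault(consumer, 0);
        List<String> unread = new ArrayList<>(events.subList(from, events.size()));
        positions.put(consumer, events.size());
        return unread;
    }

    public static void main(String[] args) {
        TopicFanOutSketch topic = new TopicFanOutSketch();
        topic.publish("ProductCreated: id=1");

        // All three notification services receive the same event.
        System.out.println("sms   -> " + topic.poll("sms-notification"));
        System.out.println("email -> " + topic.poll("email-notification"));
        System.out.println("push  -> " + topic.poll("push-notification"));
    }
}
```

Because every consumer has its own position, one slow notification service does not hold back the others, and the Product microservice never needs to know who is listening.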

Let's look at the Kafka topic in more detail.

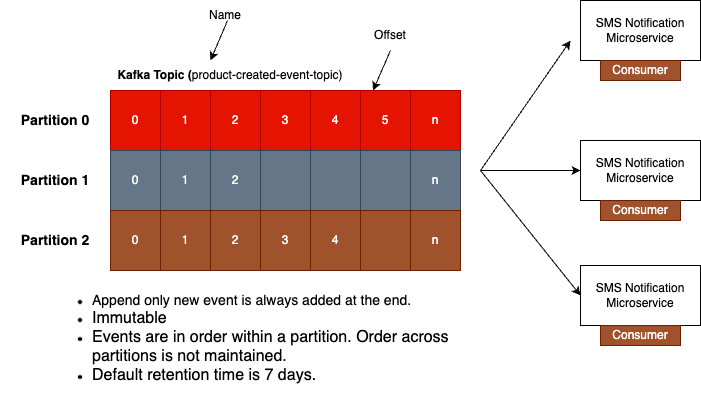

When working with a topic, you refer to it by its name, so each topic that you create has a unique name. In the diagram, the topic is named product created event topic. The topic itself is split into partitions; in the diagram it is split into three. This is very helpful because consuming microservices can read data from topic partitions in parallel, which helps us increase throughput, scale our application, and make our system work faster.

For example, if we create a topic with more than one partition, we can horizontally scale our application and have each instance of the same microservice read from its own partition in parallel. This way, events stored in the Kafka topic are processed much faster than if only one instance of the microservice were running. If one instance of our microservice is still busy processing an event, other instances can pick up other events from the topic and process them in parallel.
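To make the scaling idea concrete, here is a minimal sketch of how partitions could be spread over the running instances of one microservice. It is a simplified round-robin model, not Kafka's actual assignment logic, and the class `PartitionAssignmentSketch`, the method `assign`, and the instance names are assumptions made for this example.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Toy model only: this is NOT Kafka's real partition assignor.
class PartitionAssignmentSketch {

    // Spreads partitions 0..partitionCount-1 over the instances in round-robin order.
    // Returns a map of instance name -> partitions that instance reads from.
    static Map<String, List<Integer>> assign(int partitionCount, List<String> instances) {
        if (instances.isEmpty()) {
            throw new IllegalArgumentException("at least one instance is required");
        }
        Map<String, List<Integer>> assignment = new LinkedHashMap<>();
        for (String instance : instances) {
            assignment.put(instance, new ArrayList<>());
        }
        for (int partition = 0; partition < partitionCount; partition++) {
            String owner = instances.get(partition % instances.size());
            assignment.get(owner).add(partition);
        }
        return assignment;
    }

    public static void main(String[] args) {
        // Three partitions, three instances: each instance reads its own partition.
        System.out.println(assign(3, Arrays.asList("email-1", "email-2", "email-3")));
        // Three partitions, two instances: one instance has to read two partitions.
        System.out.println(assign(3, Arrays.asList("email-1", "email-2")));
        // Three partitions, four instances: the fourth instance gets nothing to read.
        System.out.println(assign(3, Arrays.asList("email-1", "email-2", "email-3", "email-4")));
    }
}
```

The last case hints at a practical limit: in this model, starting more instances than there are partitions does not add parallelism, so the partition count is worth choosing with the expected number of instances in mind.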

The partitions you see in the diagram are created when the topic is created. Once the topic exists, you can increase the number of partitions if needed, but Kafka does not let you decrease it; to end up with fewer partitions you would have to create a new topic. Now, the topic itself is a logical concept in Kafka, but each partition is an actual storage unit that holds data, and it is stored on disk on a Kafka server.

As shown in the diagram, the topic has three partitions, and each partition looks like a table row. Each row has cells, and this is where data is stored. So partition zero has five cells, and each cell contains event data. Now notice that each cell in a partition is numbered. This number is called the offset. It is very similar to an index in a Java array, and it starts at zero. With five events already stored at offsets zero through four, the next event stored to partition zero will get offset five, and every time a new event is stored to partition zero, the offset increases by one.

So a Kafka topic is append-only: a new event is always added at the end. Event data that is stored in a partition is also immutable. Once you store an event in a partition, you cannot change it, you cannot delete it, and you cannot update it. You also cannot insert an event in the middle of a partition, somewhere between existing events.
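The offset and append-only rules can be illustrated with a tiny in-memory model of a single partition. This is a sketch for intuition only, not how Kafka stores data on disk; the class `PartitionLogSketch` and its methods are hypothetical names. Note that the class deliberately offers no way to update, delete, or insert in the middle.

```java
import java.util.ArrayList;
import java.util.List;

// Toy in-memory model of one partition (hypothetical, not Kafka's storage implementation).
class PartitionLogSketch {
    private final List<String> events = new ArrayList<>();

    // Appends the event at the end and returns the offset it was stored at.
    long append(String event) {
        events.add(event);
        return events.size() - 1;
    }

    // Reads the event at the given offset; reading does not remove it.
    String read(long offset) {
        return events.get((int) offset);
    }

    // The offset that the next appended event will receive.
    long nextOffset() {
        return events.size();
    }

    public static void main(String[] args) {
        PartitionLogSketch partitionZero = new PartitionLogSketch();
        // Five events occupy offsets 0 through 4.
        for (int i = 0; i < 5; i++) {
            partitionZero.append("product-created-" + i);
        }
        // The sixth event is appended at the end and gets offset 5.
        long offset = partitionZero.append("product-created-5");
        System.out.println("new event stored at offset " + offset);
        // Earlier events are unchanged and can be read again.
        System.out.println("offset 0 still holds " + partitionZero.read(0));
    }
}
```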

A new entry is always added at the end of the partition, and each newly persisted message gets an offset one higher than the previous message in that partition. Notice in the diagram that each partition in this topic has a different number of numbered cells. This is because each partition keeps its own sequence of events, so different partitions can hold different numbers of events. Ordering also works per partition: within a single partition, for example partition zero, events are stored in order, but across partitions the order is not maintained.

As we already discussed, event data stored in a Kafka topic is immutable: you cannot change it and you cannot delete it. Also, after a message is consumed from a Kafka topic, it remains there and does not get deleted. It does not stay there forever, though. By default, Kafka retains events for seven days, but you can change this value through configuration properties and make Kafka topics keep data for as long as you need it.
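As a rough sketch of what the retention setting amounts to, the example below builds a topic-level configuration entry and checks whether an event is older than the retention period. `retention.ms` is the Kafka topic setting for time-based retention; everything else here (the class `RetentionSketch`, its methods, and the dates) is made up for illustration. The per-event check is a simplification, since Kafka removes old data in larger chunks called log segments rather than one event at a time.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.HashMap;
import java.util.Map;

// Illustrative helper (hypothetical names); it does not talk to a Kafka cluster.
class RetentionSketch {

    // Builds a topic-level config map with retention expressed in milliseconds.
    static Map<String, String> retentionConfig(Duration retention) {
        Map<String, String> config = new HashMap<>();
        config.put("retention.ms", String.valueOf(retention.toMillis()));
        return config;
    }

    // Simplified check: is the event older than the retention period?
    static boolean isPastRetention(Instant eventTime, Instant now, Duration retention) {
        return eventTime.plus(retention).isBefore(now);
    }

    public static void main(String[] args) {
        Duration sevenDays = Duration.ofDays(7);
        // Seven days is 604800000 milliseconds.
        System.out.println(retentionConfig(sevenDays));

        Instant now = Instant.parse("2024-01-10T00:00:00Z");
        Instant eightDaysAgo = now.minus(Duration.ofDays(8));
        Instant yesterday = now.minus(Duration.ofDays(1));
        System.out.println("8-day-old event past retention: " + isPastRetention(eightDaysAgo, now, sevenDays));
        System.out.println("1-day-old event past retention: " + isPastRetention(yesterday, now, sevenDays));
    }
}
```

A map like the one returned by `retentionConfig` is the kind of key-value configuration you would pass when creating or altering a topic, so keeping data for 30 days instead would only mean supplying a different `Duration`.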